1.2 - Single server or PC: fresh install

These instructions describe how to install a platform-complete distribution of FOLIO on a PC, including inside a Vagrant box. A single PC installation of FOLIO is useful for demo and testing purposes.

The instructions should also work for a demo / testing installation on a single server. Note all of the instructions mentioning <YOUR_IP_ADDRESS> and <YOUR_HOST_NAME>, and configure them according to your network environment.

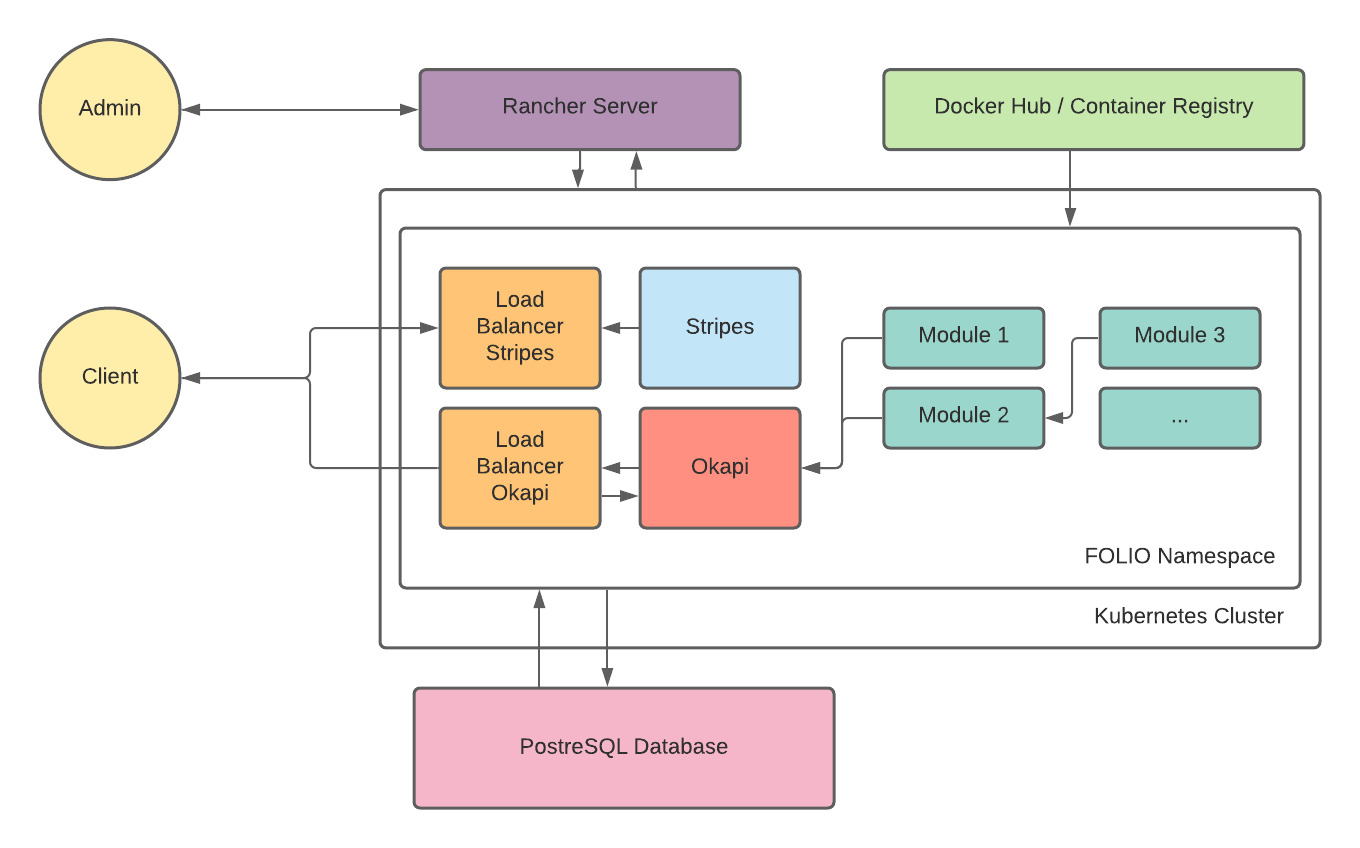

This is not considered appropriate for a production installation. A production installation should distribute the modules over multiple servers and use some kind of orchestration.

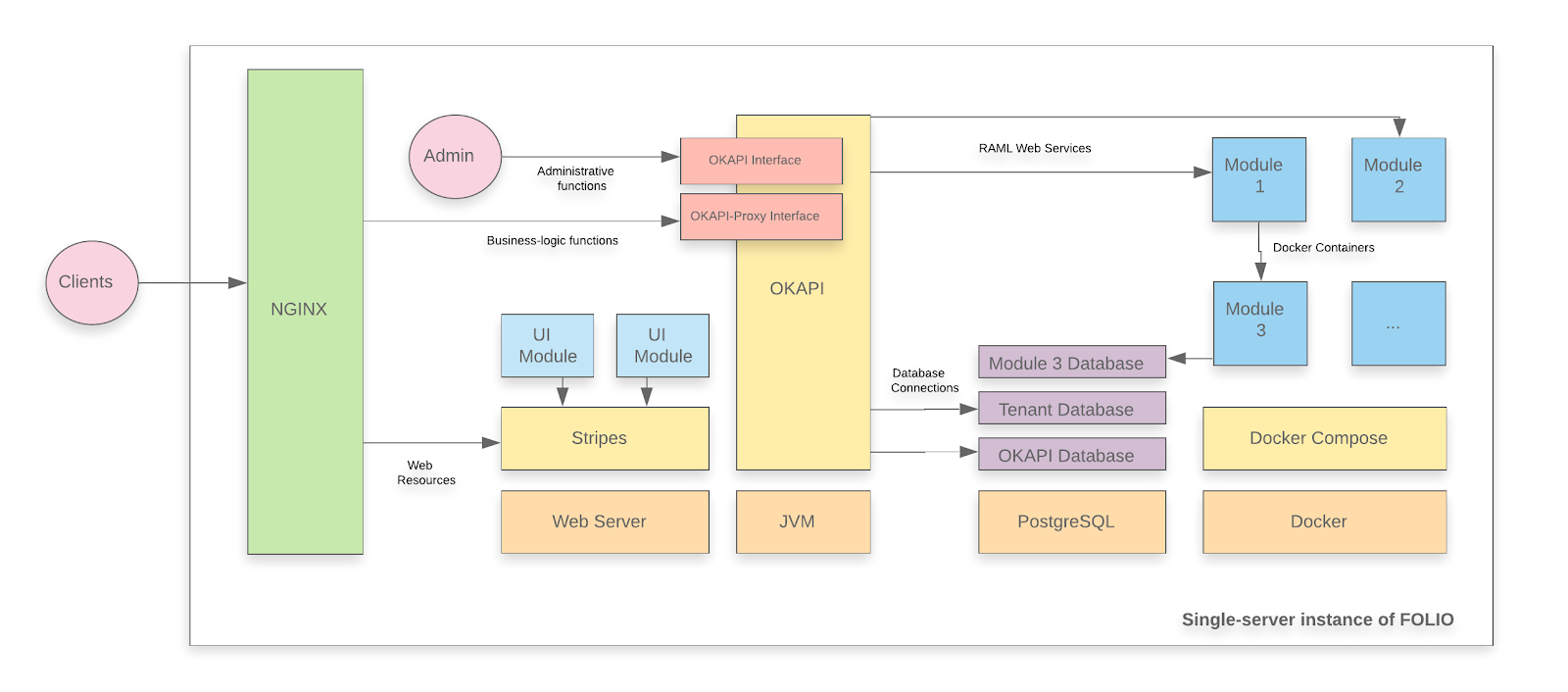

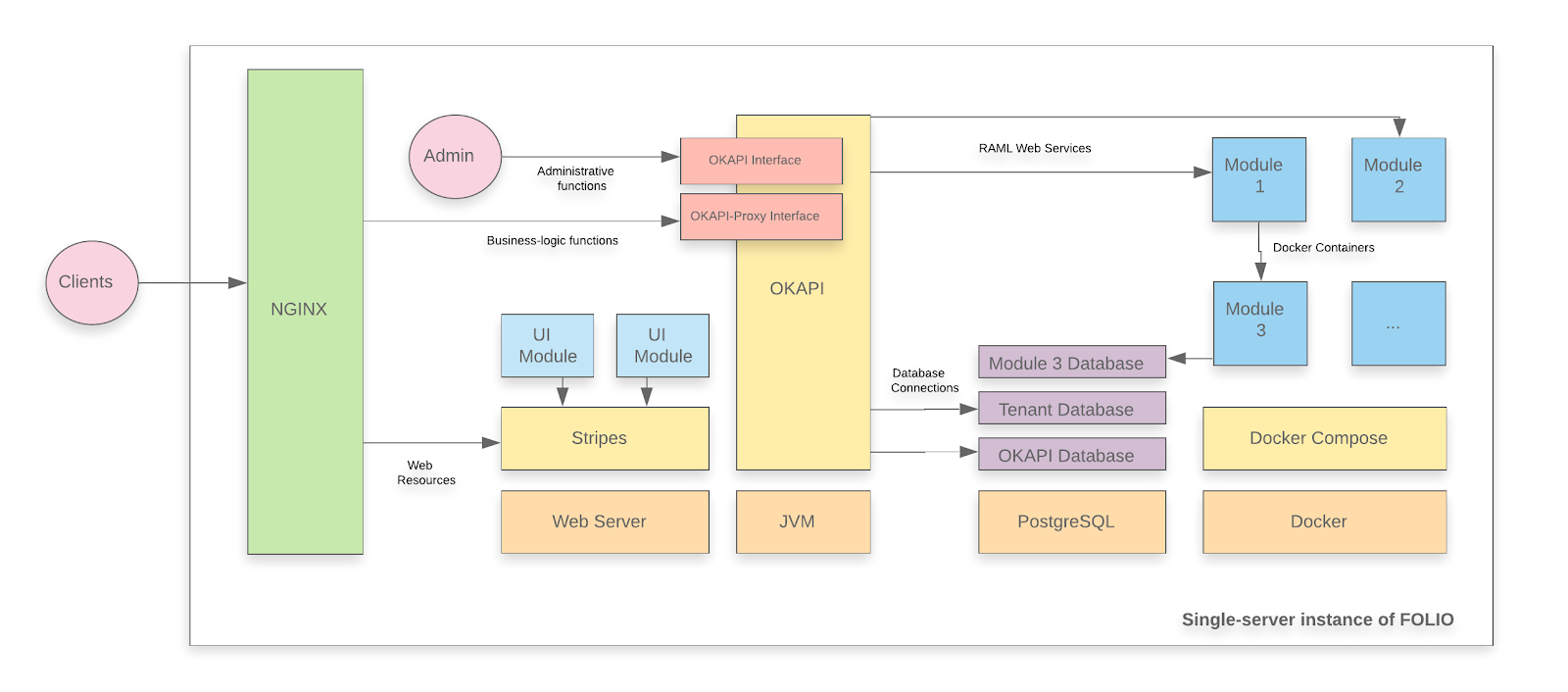

FOLIO Single Server components

A FOLIO instance is divided into two main components. The first component is Okapi, the gateway. The second component is the UI layer which is called Stripes. The single server with containers installation method will install both.

This documentation shows how to install a platform-complete distribution of Lotus.

Throughout this documentation, the sample tenant “diku” will be used. Replace with the name of your tenant, as appropriate.

System requirements

These requirements apply to the FOLIO environment. So for a Vagrant-based install, they apply to the Vagrant VM.

Software requirements

| Requirement | Recommended Version |
|---|---|
| Operating system | Ubuntu 20.04.02 LTS (Focal Fossa) 64-bits |
| Java | OpenJDK 11 |
| PostgreSQL | PostgreSQL 12 |

Hardware requirements

| Requirement | FOLIO Base Apps | FOLIO Extended Apps |
|---|---|---|
| RAM | 24GB | 40GB |
| CPU | 4 | 8 |
| HD | 100 GB SSD | 350 GB SSD |

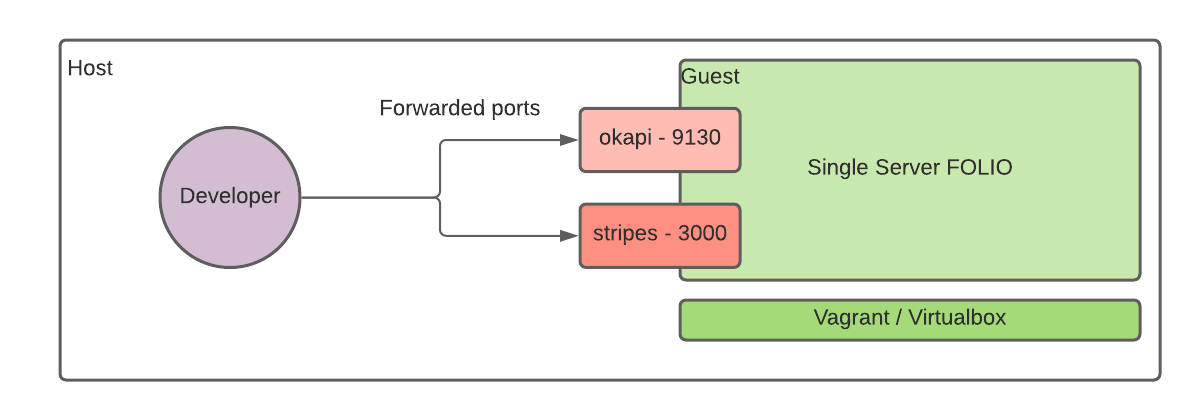

Vagrant setup

For testing FOLIO installation on a PC, it’s recommended to use Vagrant to separate the many FOLIO software components from the host PC, and to allow for saved snapshots and rolling back as needed.

-

Install Vagrant.

See the Vagrant download and installation instructions.

-

Install a virtualization product.

For Windows, install VirtualBox.

-

Install an Ubuntu box.

Create a Vagrantfile like the following.

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/focal64"
  config.vm.network "forwarded_port", guest: 9130, host: 9130
  config.vm.network "forwarded_port", guest: 80, host: 80
  config.vm.network "forwarded_port", guest: 9200, host: 9200
  config.vm.provider "virtualbox" do |vb|
    vb.memory = "49152"
  end
end

Run vagrant up in the folder with the Vagrantfile.

-

SSH into the Vagrant box.

vagrant ssh

For a Vagrant-based installation, all of the following instructions assume you are working within the Vagrant environment via vagrant ssh. You will likely want to open additional ssh connections to the box for later steps, such as following changes to the Okapi log file.

Installing Okapi

Okapi requirements

-

Update the APT cache.

sudo apt update

-

Install Java 11 and verify that Java 11 is the system default.

sudo apt -y install openjdk-11-jdk

sudo update-java-alternatives --jre-headless --jre --set java-1.11.0-openjdk-amd64

-

Import the PostgreSQL signing key, add the PostgreSQL apt repository, and install PostgreSQL.

wget --quiet -O - https://www.postgresql.org/media/keys/ACCC4CF8.asc | sudo apt-key add -

sudo add-apt-repository "deb http://apt.postgresql.org/pub/repos/apt/ focal-pgdg main"

sudo apt update

sudo apt -y install postgresql-12 postgresql-client-12 postgresql-contrib-12 libpq-dev

-

Configure PostgreSQL to listen on all interfaces and allow connections from all addresses (to allow Docker connections).

- Edit (via sudo) the file /etc/postgresql/12/main/postgresql.conf to add the line listen_addresses = '*' under the “Connection Settings” line in the “Connections and Authentication” section.

- In the same file, increase max_connections (e.g. to 500). Save and close the file.

- Edit (via sudo) the file /etc/postgresql/12/main/pg_hba.conf to add line host all all 0.0.0.0/0 md5

- Restart PostgreSQL with command sudo systemctl restart postgresql
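A common slip in this step is leaving the edited lines commented out. The following is a minimal sketch of two checks for that, assuming the file paths given above. The function names listens_on_all and allows_all_md5 are hypothetical helpers, not part of PostgreSQL or FOLIO.

```shell
# listens_on_all: succeed if stdin (the contents of postgresql.conf) has an
# active, uncommented line that sets listen_addresses to '*'.
listens_on_all() {
  grep -Eq "^[[:space:]]*listen_addresses[[:space:]]*=[[:space:]]*'\*'"
}

# allows_all_md5: succeed if stdin (the contents of pg_hba.conf) has an
# active rule of the form "host all all 0.0.0.0/0 md5".
allows_all_md5() {
  grep -Eq '^[[:space:]]*host[[:space:]]+all[[:space:]]+all[[:space:]]+0\.0\.0\.0/0[[:space:]]+md5'
}
```

For example, `sudo cat /etc/postgresql/12/main/postgresql.conf | listens_on_all && echo ok` prints ok only if the setting is active. The pg_hba.conf check works the same way with allows_all_md5.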

-

Import the Docker signing key, add the Docker apt repository and install the Docker engine.

sudo apt -y install apt-transport-https ca-certificates gnupg-agent software-properties-common

wget --quiet -O - https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt update

sudo apt -y install docker-ce docker-ce-cli containerd.io

-

Configure Docker engine to listen on network socket.

-

Create a configuration folder for Docker if it does not exist.

sudo mkdir -p /etc/systemd/system/docker.service.d

-

Create a configuration file /etc/systemd/system/docker.service.d/docker-opts.conf with the following content.

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd -H fd:// -H tcp://127.0.0.1:4243

-

Restart Docker.

sudo systemctl daemon-reload

sudo systemctl restart docker

-

Install docker-compose.

Follow the instructions from official documentation for docker. The instructions may vary depending on the architecture and operating system of your server, but in most cases the following commands will work.

sudo curl -L \

"https://github.com/docker/compose/releases/download/1.27.4/docker-compose-$(uname -s)-$(uname -m)" \

-o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

-

Install Apache Kafka and Apache ZooKeeper. Both are required by FOLIO mod-pubsub and are installed below using docker-compose.

Set the KAFKA_ADVERTISED_LISTENERS value to the private IP address of your server. For a Vagrant box, use 10.0.2.15.

mkdir ~/folio-install

cd ~/folio-install

vim docker-compose-kafka-zk.yml

Insert this content into the file. Change the IP Address in KAFKA_ADVERTISED_LISTENERS to the local IP of your server on which you run Kafka:

version: '2'
services:
  zookeeper:
    image: wurstmeister/zookeeper
    container_name: zookeeper
    restart: always
    ports:
      - "2181:2181"
  kafka:
    image: wurstmeister/kafka
    container_name: kafka
    restart: always
    ports:
      - "9092:9092"
      - "29092:29092"
    environment:
      KAFKA_LISTENERS: INTERNAL://:9092,LOCAL://:29092
      KAFKA_ADVERTISED_LISTENERS: INTERNAL://<YOUR_IP_ADDRESS>:9092,LOCAL://localhost:29092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: LOCAL:PLAINTEXT,INTERNAL:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: INTERNAL
      KAFKA_AUTO_CREATE_TOPICS_ENABLE: "true"
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 1
      KAFKA_LOG_RETENTION_BYTES: -1
      KAFKA_LOG_RETENTION_HOURS: -1

Note: The IP address <YOUR_IP_ADDRESS> should match the private IP of your server. This IP address must be reachable from Docker containers. Therefore, you cannot use localhost.

- For a Vagrant installation, the IP address should be 10.0.2.15.

You can use the ifconfig command (or ip addr) to determine the private IP.
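The lookup of the private IP can also be scripted. This is a minimal sketch, assuming the `ip` tool from iproute2 is installed and that the first non-loopback interface is the one you want. The function name first_ipv4 is a hypothetical helper.

```shell
# first_ipv4: read `ip -4 -o addr show` output on stdin and print the first
# IPv4 address that does not belong to the loopback interface "lo".
first_ipv4() {
  awk '$2 != "lo" && $3 == "inet" { split($4, parts, "/"); print parts[1]; exit }'
}
```

Run it as `ip -4 -o addr show | first_ipv4`. On a host with several interfaces (for example a Docker bridge), check that the printed address is really the one that containers can reach.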

sudo mkdir /opt/kafka-zk

sudo cp ~/folio-install/docker-compose-kafka-zk.yml /opt/kafka-zk/docker-compose.yml

cd /opt/kafka-zk

sudo docker-compose up -d

Create a database and role for Okapi

You will need to create one database in PostgreSQL to persist the Okapi configuration.

-

Log into the PostgreSQL server as a superuser.

sudo su -c psql postgres postgres

-

Create a database role for Okapi and a database to persist Okapi configuration.

CREATE ROLE okapi WITH PASSWORD 'okapi25' LOGIN CREATEDB;

CREATE DATABASE okapi WITH OWNER okapi;

-

Create a database role and database to persist tenant data.

CREATE ROLE folio WITH PASSWORD 'folio123' LOGIN SUPERUSER;

CREATE DATABASE folio WITH OWNER folio;

-

Exit psql with \q command

Once you have installed the requirements for Okapi and created a database, you can proceed with the installation. Okapi is available as a Debian package that can be easily installed in Debian-based operating systems. You only need to add the official APT repository to your server.

-

Import the FOLIO signing key, add the FOLIO apt repository and install okapi.

wget --quiet -O - https://repository.folio.org/packages/debian/folio-apt-archive-key.asc | sudo apt-key add -

sudo add-apt-repository "deb https://repository.folio.org/packages/ubuntu focal/"

sudo apt update

sudo apt-get -y --allow-change-held-packages install okapi=4.13.2-1 # R1-2022 Okapi version

sudo apt-mark hold okapi

Please note that the R1-2022 FOLIO release version of Okapi is 4.13.2-1. If you do not explicitly set the Okapi version, you will install the latest Okapi release. There is some risk with installing the latest Okapi release. The latest release may not have been tested with the rest of the components in the official release.

-

Configure Okapi to run as a single node server with persistent storage.

- Edit (via sudo) file /etc/folio/okapi/okapi.conf to reflect the following changes:

role="dev"

port_end="9340"

host="<YOUR_IP_ADDRESS>"

storage="postgres"

okapiurl="http://<YOUR_IP_ADDRESS>:9130"

docker_registries -- See explanation in okapi.conf file. Default is unauthenticated.

log4j_config="/etc/folio/okapi/log4j2.properties"

Note: The properties postgres_host, postgres_port, postgres_username, postgres_password, postgres_database should be configured in order to match the PostgreSQL configurations made previously.

Edit (via sudo) log4j2.properties. Make sure that Okapi logs to a file, and define a RollingFileAppender:

appenders = f

appender.f.type = RollingFile

appender.f.name = File

appender.f.fileName = /var/log/folio/okapi/okapi.log

appender.f.filePattern = /var/log/folio/okapi/okapi-%i.log

appender.f.layout.type = PatternLayout

appender.f.layout.pattern = %d{HH:mm:ss} [$${FolioLoggingContext:requestid}] [$${FolioLoggingContext:tenantid}] [$${FolioLoggingContext:userid}] [$${FolioLoggingContext:moduleid}] %-5p %-20.20C{1} %m%n

appender.f.policies.type = Policies

appender.f.policies.size.type = SizeBasedTriggeringPolicy

appender.f.policies.size.size = 200MB

appender.f.strategy.type = DefaultRollOverStrategy

appender.f.strategy.max = 10

rootLogger.level = info

rootLogger.appenderRefs = f

rootLogger.appenderRef.f.ref = File

-

Restart Okapi

sudo systemctl daemon-reload

sudo systemctl restart okapi

The Okapi log is at /var/log/folio/okapi/okapi.log.

-

Pull module descriptors from the central registry.

A module descriptor declares the basic module metadata (id, name, etc.), specifies the module’s dependencies on other modules (interface identifiers to be precise), and reports all “provided” interfaces. As part of the continuous integration process, each module descriptor is published to the FOLIO Registry at https://folio-registry.dev.folio.org.

curl -w '\n' -D - -X POST -H "Content-type: application/json" \

-d '{ "urls": [ "https://folio-registry.dev.folio.org" ] }' \

http://localhost:9130/_/proxy/pull/modules

Okapi log should show something like

INFO ProxyContext 283828/proxy REQ 127.0.0.1:51424 supertenant POST /_/proxy/pull/modules okapi-4.13.2

INFO PullManager Remote registry at https://folio-registry.dev.folio.org is version 4.13.2

INFO PullManager pull smart

...

INFO PullManager pull: 3466 MDs to insert

INFO ProxyContext 283828/proxy RES 200 93096323us okapi-4.13.2 /_/proxy/pull/modules

Okapi is up and running!

Create a new tenant

-

Switch to the working directory.

cd ~/folio-install

-

Create a tenant.json file:

{

"id" : "diku",

"name" : "Datalogisk Institut",

"description" : "Danish Library Technology Institute"

}

-

Post the tenant initialization to Okapi.

curl -w '\n' -D - -X POST -H "Content-type: application/json" \

-d @tenant.json \

http://localhost:9130/_/proxy/tenants

Note: In this installation guide, the Datalogisk Institut is used as an example, but you should use the information for your organization. Take into account that you have to use the id of your tenant in the next steps.

-

Enable the Okapi internal module for the tenant

curl -w '\n' -D - -X POST -H "Content-type: application/json" \

-d '{"id":"okapi"}' \

http://localhost:9130/_/proxy/tenants/diku/modules

Install Elasticsearch

You have to install Elasticsearch (ES) in order to be able to run search queries. You need to point the related modules, at least mod-pubsub and mod-search, to your ES installation (this is described further down).

Follow this guide to install a three-node Elasticsearch cluster on a Single Server: Installation of Elasticsearch.

Note for completeness: To make use of the full capabilities of FOLIO, you need to install additional services that are not part of FOLIO itself. For example, if you want to use FOLIO’s data export functionality, you have to install a MinIO server or use an Amazon S3 bucket. The installation of these services and the configuration of FOLIO to connect to them are not part of this guide. They might be included in later versions of this guide for some commonly employed services.

Install a Folio Backend

-

Post data source information to the Okapi environment for use by deployed modules. The Okapi environment variables will be picked up by every module that makes use of them during deployment. Supply at least these environment variables:

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"DB_HOST\",\"value\":\"<YOUR_IP_ADDRESS>\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"DB_PORT\",\"value\":\"5432\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"DB_DATABASE\",\"value\":\"folio\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"DB_USERNAME\",\"value\":\"folio\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"DB_PASSWORD\",\"value\":\"folio123\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_HOST\",\"value\":\"<YOUR_IP_ADDRESS>\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_PASSWORD\",\"value\":\"s3cret\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_URL\",\"value\":\"http://<YOUR_IP_ADDRESS>:9200\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_USERNAME\",\"value\":\"elastic\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"INITIAL_LANGUAGES\",\"value\":\"eng, ger, swe\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"KAFKA_HOST\",\"value\":\"<YOUR_IP_ADDRESS>\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"KAFKA_PORT\",\"value\":\"9092\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"OKAPI_URL\",\"value\":\"http://<YOUR_IP_ADDRESS>:9130\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"SYSTEM_USER_PASSWORD\",\"value\":\"pub-sub\"}" http://localhost:9130/_/env

Note: Make sure that you use your private IP for the properties DB_HOST, KAFKA_HOST and OKAPI_URL. (In a Vagrant environment, 10.0.2.15 should work.) Change passwords as you like, but make sure that you use the same passwords in your installations of the database and elasticsearch. SYSTEM_USER_PASSWORD will be used by mod-pubsub and mod-search. It needs to be the same as those used for the system users system-user, pub-sub and mod-search (and potentially more system generated users).

Set the ELASTICSEARCH_* variables so that they point to your Elasticsearch installation.
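The repeated curl calls in this step can also be driven from a small file of NAME=VALUE lines. This is a minimal sketch: the function names and the file format are assumptions made for illustration, and only the endpoint http://localhost:9130/_/env and the curl options come from the commands above.

```shell
# env_json NAME VALUE: print the JSON payload posted to Okapi's /_/env endpoint.
# Assumes NAME and VALUE contain no double quotes or backslashes.
env_json() {
  printf '{"name":"%s","value":"%s"}' "$1" "$2"
}

# post_env NAME VALUE: post one environment variable to Okapi.
post_env() {
  curl -w '\n' -D - -X POST -H "Content-Type: application/json" \
    -d "$(env_json "$1" "$2")" http://localhost:9130/_/env
}

# post_env_file FILE: post every NAME=VALUE line of FILE.
# Blank lines and lines starting with '#' are skipped.
post_env_file() {
  while IFS='=' read -r name value; do
    case "$name" in ''|'#'*) continue ;; esac
    post_env "$name" "$value"
  done < "$1"
}
```

With a (hypothetical) file okapi-env.txt that contains lines such as DB_HOST=10.0.2.15 and DB_PORT=5432, `post_env_file okapi-env.txt` posts each variable in turn. Keeping the values in one file makes it easier to review the IP addresses and passwords before they are sent.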

You may at this point also want to set environment variables for modules which are not part of Okapi’s global env vars. Follow these instructions Change Environment Variables of a Module (cf. the section named “When the module has not yet been deployed”).

Consult the documentation of each module on GitHub to learn which configuration options can be set through environment variables. For example, for mod-search, look at https://github.com/folio-org/mod-search#environment-variables .

You can also find a list of environment variables for each module at the Overview - Metadata section of the module’s page in Folio org’s Dockerhub. For example, for mod-search, this is at https://hub.docker.com/r/folioorg/mod-search.

-

Check out platform-complete.

cd $HOME

git clone https://github.com/folio-org/platform-complete

cd platform-complete

- Check out the latest stable branch of the repository (one which has undergone bugfest or hotfix testing)

git checkout R1-2022-hotfix-2

-

Deploy and enable the backend modules.

Deploy the backend modules

Deploy all backend modules of the release with a single post to okapi’s install endpoint. This will deploy and enable all backend modules. Start with a simulation run:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @$HOME/platform-complete/okapi-install.json http://localhost:9130/_/proxy/tenants/diku/install?simulate=true\&preRelease=false

The system will show you what it will do. It will also enable dependent frontend modules (which may not be of a release version).

Now, actually deploy and enable the backend modules.

Note: Change the following to “loadSample%3Dtrue” if you want to load sample data (this will populate your inventory with sample data, which might be unwanted if you later want to migrate your own inventory data):

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @$HOME/platform-complete/okapi-install.json http://localhost:9130/_/proxy/tenants/diku/install?deploy=true\&preRelease=false\&tenantParameters=loadReference%3Dtrue%2CloadSample%3Dfalse

This will pull the Docker images from Docker Hub and spin up a container on your host for each backend module.

Progress can be followed in the Okapi log at /var/log/folio/okapi/okapi.log

This will run for 15 minutes or so.

If that fails, remedy the error cause and try again until the post succeeds.
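In the install URL above, the tenantParameters value is URL-encoded: %3D stands for "=" and %2C stands for ",". The following sketch builds that URL from plain arguments. The function names tenant_params and install_url are hypothetical, and the host and port are the ones used throughout this guide.

```shell
# tenant_params LOAD_REFERENCE LOAD_SAMPLE: print the URL-encoded value of
# the tenantParameters query argument ("=" becomes %3D, "," becomes %2C).
tenant_params() {
  printf 'loadReference%%3D%s%%2CloadSample%%3D%s' "$1" "$2"
}

# install_url TENANT LOAD_REFERENCE LOAD_SAMPLE: print the full install URL
# for deploying and enabling the backend modules.
install_url() {
  printf 'http://localhost:9130/_/proxy/tenants/%s/install?deploy=true&preRelease=false&tenantParameters=%s' \
    "$1" "$(tenant_params "$2" "$3")"
}
```

For example, `curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @$HOME/platform-complete/okapi-install.json "$(install_url diku true false)"` sends the same request as the command above. Quoting the URL also removes the need to escape each "&".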

Check what is in your Discovery:

curl -w '\n' -D - http://localhost:9130/_/discovery/modules | grep srvcId

There should be 65 modules in your Okapi discovery - those which are in okapi-install.json - if all went well.

Check which Docker containers are running on your host:

sudo docker ps --all | grep mod- | wc -l

This should also show the number 65.

Get a list of backend modules that have now been enabled for your tenant:

curl -w '\n' -XGET http://localhost:9130/_/proxy/tenants/diku/modules | grep mod- | wc -l

There should be 65 of these as well.
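The counts above rely on Okapi printing one module id per line. A slightly more robust sketch counts the "id" values themselves, so it also works if the JSON arrives on a single line. The function name count_ids is a hypothetical helper.

```shell
# count_ids PREFIX: count the JSON "id" values on stdin that start with PREFIX.
count_ids() {
  grep -o "\"id\" *: *\"$1[^\"]*\"" | wc -l
}
```

For example, `curl -s http://localhost:9130/_/proxy/tenants/diku/modules | count_ids mod-` prints the number of backend modules enabled for the tenant.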

Now you have installed a complete FOLIO backend.

Congratulations!

The backend of the new tenant is ready.

Now, you have to set up a Stripes instance for the frontend of the tenant, create a superuser for the tenant and optionally secure Okapi.

Install the frontend, Folio Stripes

Now that you have an Okapi instance running, you can proceed to install Stripes. Stripes is bundled and deployed on a per-tenant basis.

Install Stripes and nginx in a Docker container. Use the docker file of platform-complete.

cd ~/platform-complete

edit docker/Dockerfile

ARG OKAPI_URL=http(s)://<YOUR_DOMAIN_NAME>/okapi

ARG TENANT_ID=diku # Or change to your tenant's name

<YOUR_DOMAIN_NAME> is usually your server name (host name plus domain), unless you are doing a redirect from some other domain. The subpath /okapi of your domain name will be redirected to port 9130 below, in your nginx configuration. Thus, the Okapi port 9130 does not need to be released to outside of your network.

Edit docker/nginx.conf to include the content below. Replace the server name and IP address found in the original version of nginx.conf with the values for your server:

server {

listen 80;

server_name <YOUR_SERVER_NAME>;

charset utf-8;

access_log /var/log/nginx/host.access.log combined;

# front-end requests:

# Serve index.html for any request not found

location / {

# Set path

root /usr/share/nginx/html;

index index.html index.htm;

include mime.types;

types {

text/plain lock;

}

try_files $uri /index.html;

}

# back-end requests:

location /okapi {

rewrite ^/okapi/(.*) /$1 break;

proxy_pass http://<YOUR_IP_ADDRESS>:9130/;

}

}

<YOUR_SERVER_NAME> should be the real name of your server in your network. <YOUR_SERVER_NAME> should consist of host name plus domain name, e.g. myserv.mydomain.edu. If you are not doing a redirect, <YOUR_SERVER_NAME> is the same as <YOUR_DOMAIN_NAME>.

Note: If you want to host multiple tenants on a server, you can configure NGINX to either open a new port for each tenant or set up different paths on the same port (e.g. /tenant1, /tenant2).

Edit the url and tenant in stripes.config.js. The url will be requested by a FOLIO client, that is, by a browser. Make sure that you use the public IP or domain of your server. Use http only if you want to access your FOLIO installation exclusively from within your network.

edit stripes.config.js

okapi: { 'url':'http(s)://<YOUR_DOMAIN_NAME>/okapi', 'tenant':'diku' },

You might also edit branding in stripes.config.js, e.g. add your own logo and favicon as desired. Edit these lines:

branding: {

logo: {

src: './tenant-assets/mybib.gif',

alt: 'My Folio Library',

},

favicon: {

src: './tenant-assets/mybib_icon.gif'

},

}

Build the Docker container

Build the docker container which will contain stripes and nginx:

sudo su

docker build -f docker/Dockerfile --build-arg OKAPI_URL=http://<YOUR_DOMAIN_NAME>/okapi --build-arg TENANT_ID=diku -t stripes .

Sending build context to Docker daemon 1.138GB

Step 1/19 : FROM node:15-alpine as stripes_build

...

Step 19/19 : ENTRYPOINT ["/usr/bin/entrypoint.sh"]

---> Running in a47dce4e3b3e

Removing intermediate container a47dce4e3b3e

---> 48a532266f21

Successfully built 48a532266f21

Successfully tagged stripes:latest

This will run for approximately 15 minutes.

Make sure nginx is not already running on your VM

sudo service nginx stop

You will get an error if it was not already running, which is fine.

Make sure nothing else is running on port 80.

sudo apt install net-tools

netstat -anpe | grep ":80"

You should get no results.

Start the Docker container

Redirect port 80 from the outside to port 80 of the docker container. (When using SSL, port 443 has to be redirected.)

nohup docker run --name stripes -d -p 80:80 stripes

(Optionally) Log in to the Docker container

Check if your config file looks o.k. and follow the access log inside the container:

docker exec -it <container_id> sh

vi /etc/nginx/conf.d/default.conf

tail -f /var/log/nginx/host.access.log

Exit sudo

exit

Enable the frontend modules for your tenant

Use the parameter deploy=false of Okapi’s install endpoint for the frontend modules and post the list of frontend modules stripes-install.json to the install endpoint. This will enable the frontend modules of the release version for your tenant.

First, simulate what will happen:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @$HOME/platform-complete/stripes-install.json http://localhost:9130/_/proxy/tenants/diku/install?simulate=true\&preRelease=false

Then, enable the frontend modules for your tenant:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @$HOME/platform-complete/stripes-install.json http://localhost:9130/_/proxy/tenants/diku/install?deploy=false\&preRelease=false\&tenantParameters=loadReference%3Dfalse

Get a list of modules which have been enabled for your tenant:

curl -w '\n' -XGET http://localhost:9130/_/proxy/tenants/diku/modules | grep id | wc -l

There should be 131 modules enabled.

This number is the sum of the following:

56 Frontend modules (folio_*)

9 Edge modules

65 Backend modules (R1-2022) (mod-*)

1 Okapi module (4.13.2)

These are all R1 (Lotus) modules.

You have installed all modules now. Check again what containers are running in docker:

sudo docker ps --all | wc -l

This should show 72 containers running.

The following containers are running on your system, but do not contain backend modules:

- Stripes

- 3 times Elasticsearch

- Kafka

- ZooKeeper

In sum, these are 6 containers which do not run backend modules. Also subtract the header line (of “docker ps”), and you will arrive at

72 - 7 = 65 containers which run backend modules.
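The subtraction above can be avoided by counting only the images of backend modules. This is a minimal sketch, assuming that backend module image names contain "mod-", as in the grep used earlier in this guide. The function name count_module_images is a hypothetical helper.

```shell
# count_module_images: count the lines on stdin that name a backend module
# image. Assumes such image names contain the string "mod-".
count_module_images() {
  grep -c 'mod-'
}
```

For example, `sudo docker ps --format '{{.Image}}' | count_module_images` prints one number. The --format option makes docker ps print only the image name of each container, without a header line, so neither the header nor the Stripes, Elasticsearch, Kafka and ZooKeeper containers are counted.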

Create a superuser

You need to create a superuser for your tenant in order to be able to administer it. This is a multi-step process, and the details can be found in the Okapi documentation. You can use a Perl script to execute these steps automatically. You only need to provide the tenant id, a username and password for the superuser, and the URL of Okapi.

Install gcc on Ubuntu 20 (prerequisite to install Perl modules from cpan)

sudo apt install gcc

gcc --version

gcc (Ubuntu 9.3.0-17ubuntu1~20.04) 9.3.0

sudo apt install make

Install prerequisite Perl modules

sudo cpan install LWP.pm

sudo cpan install JSON.pm

sudo cpan install UUID::Tiny

Use the bootstrap-superuser.pl Perl script to create a superuser:

wget "https://raw.githubusercontent.com/folio-org/folio-install/master/runbooks/single-server/scripts/bootstrap-superuser.pl"

perl bootstrap-superuser.pl \

--tenant diku --user diku_admin --password admin \

--okapi http://localhost:9130

Now Stripes is running on port 80 (or 443, if you configured SSL) and you can open it using a browser.

Go to http(s)://<YOUR_HOST_NAME>/.

Log in with the credentials of the superuser that you have created.

Create Elasticsearch Index

Note: You might want to defer creating the ES index until after you have migrated some data to your freshly created FOLIO instance. If you have loaded sample data above and do not plan to migrate data, then you should create the index now.

(This section follows https://github.com/folio-org/mod-search#recreating-elasticsearch-index .)

Create Elasticsearch index for the first time

Assign the following permission to user diku_admin:

search.index.inventory.reindex.post (Search - starts inventory reindex operation)

Use the “Users” app of the UI.

Get a new Token:

export TOKEN=$( curl -s -S -D - -H "X-Okapi-Tenant: diku" -H "Content-type: application/json" -H "Accept: application/json" -d '{ "tenant" : "diku", "username" : "diku_admin", "password" : "admin" }' http://localhost:9130/authn/login | grep -i "^x-okapi-token: " )

curl -w '\n' -D - -X POST -H "$TOKEN" -H "X-Okapi-Tenant: diku" -H "Content-type: application/json" -d '{ "recreateIndex": true, "resourceName": "instance" }' http://localhost:9130/search/index/inventory/reindex

HTTP/1.1 200 OK

vary: origin

Content-Type: application/json

Date: Fri, 22 Jul 2022 19:00:00 GMT

transfer-encoding: chunked

{"id":"02c8e76a-0606-43f2-808e-86f3c48b65c6","jobStatus":"In progress","submittedDate":"2022-07-22T19:00:00.000+00:00"}

Follow okapi.log. You will see a lot of logging:

/inventory-view RES 200 mod-inventory-storage …

Posting to the endpoint /search/index/inventory/reindex causes actions on all 3 elasticsearch containers (nodes).

Indexing of 200,000 instances takes 5-6 minutes.
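The login response is sent with HTTP line endings, so the header line captured in TOKEN above may end with a carriage return. The sketch below strips it before the value is reused. The function name token_header is a hypothetical helper.

```shell
# token_header: read HTTP response headers on stdin and print the first
# "x-okapi-token: ..." header line, without a trailing carriage return.
token_header() {
  tr -d '\r' | grep -i '^x-okapi-token: ' | head -n 1
}
```

It can replace the grep at the end of the login command: pipe the output of the same curl call to /authn/login into token_header and assign the result to TOKEN. The value can then be passed as -H "$TOKEN" exactly as in the reindex request above.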

Monitoring the reindex process

(This section follows https://github.com/folio-org/mod-search#monitoring-reindex-process .)

There is no end-to-end monitoring implemented yet; however, it is possible to monitor the process partially. To check how many records have been published to the Kafka topic, use the inventory API. Instead of the id “02c8e76a-0606-43f2-808e-86f3c48b65c6”, use the id that was reported by your post to /search/index/inventory/reindex above:

curl -w '\n' -D - -X GET -H "$TOKEN" -H "X-Okapi-Tenant: diku" -H "Content-type: application/json" http://localhost:9130/instance-storage/reindex/02c8e76a-0606-43f2-808e-86f3c48b65c6

HTTP/1.1 200 OK

vary: origin

Content-Type: application/json

transfer-encoding: chunked

{

"id" : "02c8e76a-0606-43f2-808e-86f3c48b65c6",

"published" : 224823,

"jobStatus" : "Ids published",

"submittedDate" : "2022-07-22T19:15:00.000+00:00"

}

Compare the number “published” to the number of instance records that you have actually loaded or migrated to your FOLIO inventory.
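To make that comparison in a script, the "published" value can be extracted from the response. This is a minimal sketch that relies only on the response shape shown above. The function name published_count is a hypothetical helper.

```shell
# published_count: read a reindex job JSON document on stdin and print the
# numeric value of its "published" field.
published_count() {
  grep -o '"published" *: *[0-9][0-9]*' | grep -o '[0-9][0-9]*$'
}
```

For example, append `| published_count` to a silent version of the GET request above (curl -s, without -D -) and compare the printed number with the number of instance records you expect.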

Confirm that FOLIO is running

Log in to your frontend: E.g., go to http://<YOUR_HOST_NAME>/ in your browser.

Can you see the installed modules in Settings - Installation details?

Do you see the right Okapi version, 4.13.2-1?

Does everything look good?

The system is ready!

1.3 - Single server with containers

A single server installation is considered a non-production installation. For a production installation, some kind of orchestration should be applied. A single server installation of FOLIO is useful for demo and testing purposes.

A FOLIO instance is divided into two main components. The first component is Okapi, the gateway. The second component is the UI layer which is called Stripes. The single server with containers installation method will install both.

Changes in the documentation

There are some changes in the idea of this documentation as compared to the documentations for previous releases (cf. Juniper or Iris documentations).

- This is a documentation for an upgrade of your FOLIO system. It assumes that you have already successfully installed Juniper (GA or a Juniper hotfix release) and now want to upgrade your system to Kiwi. If you are deploying FOLIO for the first time, or if you want to start with a fresh installation for whatever reasons, go to the Juniper single server documentation and come back here when you have installed Juniper.

- This documentation assumes that you have installed the platform-complete distribution and want to upgrade the modules of that distribution. Documentation for previous releases was written for the more concise “platform-core” distribution. The platform-core distribution is no longer supported.

System requirements

Software requirements

| Requirement | Recommended Version |
|---|---|
| Operating system | Ubuntu 20.04.02 LTS (Focal Fossa) 64-bits |
| FOLIO system | Juniper (R2-2021) |

Hardware requirements

| Requirement | FOLIO Extended Apps |
|---|---|
| RAM | 40GB |
| CPU | 8 |
| HD | 350 GB SSD |

I. Before the Upgrade

First do Ubuntu Updates & Upgrades

sudo apt-get update

sudo apt-get upgrade

sudo reboot

Check that all services have been restarted after the reboot: Okapi, postgres, docker, and the docker containers (run: docker ps --all | more). Stripes and nginx run in one container if you followed the Juniper docs; restart this container.

The following actions in this section have been taken from the Kiwi Release Notes. There might be more actions that you might need to take for the upgrade of your FOLIO installation. If you are unsure what other steps you might need to take, study the Release Notes.

i. Change all duplicate item barcodes

Item barcode is unique now. Duplicate item barcodes fail the upgrade.

Before upgrade: Change all duplicate item barcodes. Find them with this SQL:

psql folio

SET search_path TO diku_mod_inventory_storage;

SELECT lower(jsonb->>'barcode')

FROM item

GROUP BY 1

HAVING count(*) > 1;

lower

-------

(0 rows)

Change “diku” to the name of your tenant. Use Inventory Item Barcode search to edit the duplicate barcode.
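The same check can be run outside the database, for example on a list of barcodes exported before a migration. This sketch mirrors the logic of the SQL above (lower-case every value, then report values that occur more than once). The function name duplicate_barcodes is a hypothetical helper, and the input is assumed to hold one barcode per line.

```shell
# duplicate_barcodes: read one barcode per line on stdin and print each
# lower-cased value that occurs more than once.
duplicate_barcodes() {
  tr '[:upper:]' '[:lower:]' | sort | uniq -d
}
```

An empty result corresponds to the “(0 rows)” output of the query. Empty lines in the input are also reported if they repeat, so remove items without a barcode first.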

ii. Change all holdings sources to FOLIO

Holdings created by a MARC Bib, not a MARC Holdings, showed the source = MARC. The behavior was changed to show source = FOLIO for such holdings. Database tables might contain holdings records with incorrect source value. See here MODSOURMAN-627 - Script for retrieving holding by specific conditions.

Log in to your postgres database (on linux console, type “psql -U folio folio”) and select the Holdings where source name is not FOLIO or MARC :

SET search_path TO diku_mod_inventory_storage;

SELECT *

FROM holdings_record

WHERE holdings_record.jsonb ->> 'sourceId' = (

SELECT id::text

FROM holdings_records_source

WHERE holdings_records_source.jsonb ->> 'name' != 'FOLIO' AND

holdings_records_source.jsonb ->> 'name' != 'MARC');

(0 rows)

Replace “diku” with the name of your tenant. On a standard (test or demo) install, the tenant is “diku”.

If the holdings source is anything other than FOLIO or MARC (e.g. -), then change it to FOLIO.

iii. Install Elasticsearch

If you have not already done so in your Juniper Install (it was optional there) install Elasticsearch now in your running Juniper instance. Follow this guide to install a 3-node Elasticsearch cluster on a Single Server: Installation of Elasticsearch. This will also install mod-search and the frontend modules folio_inventory-es and folio_search in Juniper.

iv. Install a MinIO server

If you want to use Data Export, you have to use either Amazon S3 or a MinIO server.

So, for anyone who plans to use MinIO server instead of Amazon S3:

External storage for generated MARC records should be configured to MinIO server by changing ENV variable AWS_URL.

Installation of a MinIO server is not covered in this documentation. Refer to:

MinIO Deployment and Management,

Deploy MinIO Standalone.

v. More preparatory steps

There might be more preparatory steps that you need to take for your installation. If you are unsure what other steps you might need to take, study the Kiwi Release Notes.

II. Main Processing: Upgrade Juniper => Kiwi

This documentation assumes that you have Juniper Hotfix#3 running. Upgrade procedures for other Hotfixes or the GA Release might vary slightly. In particular, if this documentation refers to Juniper Release module versions, check if you have exactly that version running and if not, use the version that you had deployed.

II.i. Upgrade the Okapi Version / Restart Okapi

This needs to be done first; otherwise Okapi cannot pull the new modules.

Read the Okapi Release Version from the platform-complete/install.json file.

- Clone the repository, change into that directory:

git clone https://github.com/folio-org/platform-complete

cd platform-complete

git fetch

There is a new Branch R3-2021-hotfix-2. We will deploy this version.

Check out this Branch.

Stash local changes. This should only pertain to stripes.config.js .

Discard any changes which you might have made in Juniper on install.json etc.:

git restore install.json

git restore okapi-install.json

git restore stripes-install.json

git restore package.json

git stash save

git checkout master

git pull

git checkout R3-2021-hotfix-2

git stash pop

Read the R3 Okapi version from install.json: okapi-4.11.1
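Instead of reading the version by eye, it can be extracted on the command line. The following is a minimal sketch, assuming that install.json lists Okapi as an entry of the form "id": "okapi-<version>" on a single line; the function name okapi_version is made up for this example.

```shell
#!/bin/sh
# Print the Okapi version found in a platform install.json.
# Assumption: the file contains a line like   "id": "okapi-4.11.1",
okapi_version() {
  sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"okapi-\([0-9][0-9.]*\)".*/\1/p' "$1" | head -n 1
}

# Usage (run inside the platform-complete checkout):
#   okapi_version install.json
```

The printed value can then be compared with the package version that is installed in the next step.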

Fetch Okapi as a Debian package from repository.folio.org .

Import the FOLIO signing key, add the FOLIO apt repository, install okapi (of this release):

wget --quiet -O - https://repository.folio.org/packages/debian/folio-apt-archive-key.asc | sudo apt-key add -

sudo add-apt-repository "deb https://repository.folio.org/packages/ubuntu focal/"

sudo apt-get update

sudo apt-get -y --allow-change-held-packages install okapi=4.11.1-1

Start Okapi in cluster mode

I install Okapi in cluster mode, because this is the appropriate way to install it in a production environment (although it is being done on a single server here, this procedure could also be applied to a multi-server environment). Strictly speaking, on a single server, it is not necessary to deploy Okapi in cluster mode. So you might want to stay with the default role “dev”, instead of “cluster”.

Change the port range in okapi.conf . Compared to Juniper, this needs to be done now, because Elasticsearch will occupy ports 9200 and 9300 (or is already occupying them) :

vim /etc/folio/okapi/okapi.conf

# then change the following lines to:

- role="cluster"

- cluster_config="-hazelcast-config-file /etc/folio/okapi/hazelcast.xml"

- cluster_port="9001"

- port_start="9301"

- port_end="9520"

- host="10.X.X.X" # change to your host's IP address

- nodename="10.X.X.X"

You can find out the node name that Okapi uses like this: curl -X GET http://localhost:9130/_/discovery/nodes . You have to use that value if you deploy Okapi in cluster mode. Not your host name and not “localhost”.

Edit interface and members in hazelcast.xml (if you deploy Okapi in cluster mode). If you run Okapi on a single server, this is your local IP address.

vim /etc/folio/okapi/hazelcast.xml

...

<tcp-ip enabled="true">

<interface>10.X.X.X</interface> <!-- replace by your host's IP address -->

<member-list>

<member>10.X.X.X</member> <!-- replace by your host's IP address -->

</member-list>

</tcp-ip>

Send new Environment Variables for Hazelcast to Okapi (in case you haven’t been using Hazelcast so far):

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"OKAPI_CLUSTERHOST\",\"value\":\"10.X.X.X\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"HAZELCAST_IP\",\"value\":\"10.X.X.X\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"HAZELCAST_PORT\",\"value\":\"5701\"}" http://localhost:9130/_/env

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"HAZELCAST_FILE\",\"value\":\"/etc/folio/okapi/hazelcast.xml\"}" http://localhost:9130/_/env

Restart Okapi:

sudo systemctl daemon-reload

sudo systemctl restart okapi.service

Follow /var/log/folio/okapi/okapi.log . You should read something like this:

INFO DeploymentManager shutdown

...

Now Okapi will re-start your modules. Follow the okapi.log. It will run for 2 or 3 minutes. Check if all modules are running:

docker ps --all | grep "mod-" | wc -l

62

Retrieve the list of modules which are now being enabled for your tenant (just for your information):

curl -w '\n' -XGET http://localhost:9130/_/proxy/tenants/diku/modules

...

}, {

"id" : "okapi-4.11.1"

} ]

You should see 9 Edge modules (if you have started from Juniper HF#3; if you have started from Juniper-GA, you will see only 8 Edge modules), 52 Frontend modules (folio_*), 62 Backend modules (mod-*) (These are the modules of Juniper, platform-complete) + the Kiwi-Version of Okapi (4.11.1).

II.ii. Pull module descriptors from the central registry

A module descriptor declares the basic module metadata (id, name, etc.), specifies the module’s dependencies on other modules (interface identifiers to be precise), and reports all “provided” interfaces. As part of the continuous integration process, each Module Descriptor is published to the FOLIO Registry at https://folio-registry.dev.folio.org.

curl -w '\n' -D - -X POST -H "Content-type: application/json" \
-d '{ "urls": [ "https://folio-registry.dev.folio.org" ] }' http://localhost:9130/_/proxy/pull/modules

Okapi log should show something like

INFO ProxyContext 283828/proxy REQ 127.0.0.1:51424 supertenant POST /_/proxy/pull/modules okapi-4.11.1

INFO PullManager Remote registry at https://folio-registry.dev.folio.org is version 4.11.1

INFO PullManager pull smart

...

INFO PullManager pull: 3466 MDs to insert

INFO ProxyContext 283828/proxy RES 200 93096323us okapi-4.11.1 /_/proxy/pull/modules

II.iii. Deploy a compatible FOLIO backend

- Post data source information to the Okapi environment for use by deployed modules

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_HOST\",\"value\":\"10.X.X.X\"}" http://localhost:9130/_/env;

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_URL\",\"value\":\"http://10.X.X.X:9200\"}" http://localhost:9130/_/env;

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_USERNAME\",\"value\":\"elastic\"}" http://localhost:9130/_/env;

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"ELASTICSEARCH_PASSWORD\",\"value\":\"s3cret\"}" http://localhost:9130/_/env; # Use the password that you have chosen for Elasticsearch above !!

curl -w '\n' -D - -X POST -H "Content-Type: application/json" -d "{\"name\":\"INITIAL_LANGUAGES\",\"value\":\"eng, ger, swe\"}" http://localhost:9130/_/env; # replace by the language codes for the languages that you need to support

Change 10.X.X.X to your local IP address. You can choose up to five INITIAL_LANGUAGES. To find out the language codes, view here mod-search: multi language search support .
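The repeated curl calls above differ only in the variable name and value. As a sketch, they could be wrapped in a small helper; the function names okapi_env_json and post_okapi_env and the OKAPI variable are assumptions made for this example, and the helper assumes that names and values contain no double quotes or backslashes.

```shell
#!/bin/sh
# Build the JSON body for one Okapi environment variable.
# Assumption: name and value contain no double quotes or backslashes.
okapi_env_json() {
  printf '{"name":"%s","value":"%s"}\n' "$1" "$2"
}

# POST one variable to Okapi's /_/env endpoint (same request as above).
# OKAPI defaults to http://localhost:9130; override it in the environment.
post_okapi_env() {
  curl -w '\n' -D - -X POST -H "Content-Type: application/json" \
    -d "$(okapi_env_json "$1" "$2")" "${OKAPI:-http://localhost:9130}/_/env"
}

# Usage (placeholder values; replace them with your own):
#   post_okapi_env ELASTICSEARCH_HOST 10.X.X.X
#   post_okapi_env ELASTICSEARCH_URL  http://10.X.X.X:9200
```

Nothing is sent until post_okapi_env is called, so the payload can be checked first with okapi_env_json alone.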

The Okapi environment should now look something like this:

curl -X GET http://localhost:9130/_/env

[ {

"name" : "DB_DATABASE",

"value" : "folio"

}, {

"name" : "DB_HOST",

"value" : "10.X.X.X"

}, {

"name" : "DB_PASSWORD",

"value" : "folio123"

}, {

"name" : "DB_PORT",

"value" : "5432"

}, {

"name" : "DB_USERNAME",

"value" : "folio"

}, {

"name" : "ELASTICSEARCH_HOST",

"value" : "10.X.X.X"

}, {

"name" : "ELASTICSEARCH_PASSWORD",

"value" : "s3cret"

}, {

"name" : "ELASTICSEARCH_URL",

"value" : "http://10.X.X.X:9200"

}, {

"name" : "ELASTICSEARCH_USERNAME",

"value" : "elastic"

}, {

"name" : "HAZELCAST_FILE",

"value" : "/etc/folio/okapi/hazelcast.xml"

}, {

"name" : "HAZELCAST_IP",

"value" : "10.X.X.X"

}, {

"name" : "HAZELCAST_PORT",

"value" : "5701"

}, {

"name" : "INITIAL_LANGUAGES",

"value" : "eng, ger, swe"

}, {

"name" : "KAFKA_HOST",

"value" : "10.X.X.X"

}, {

"name" : "KAFKA_PORT",

"value" : "9092"

}, {

"name" : "OKAPI_CLUSTERHOST",

"value" : "10.X.X.X"

}, {

"name" : "OKAPI_URL",

"value" : "http://10.X.X.X:9130"

}, {

"name" : "SYSTEM_USER_PASSWORD",

"value" : "pub-sub"

} ]

If you chose a SYSTEM_USER_PASSWORD other than the default (which you should do on a production system), you have to change the password of the user “pub-sub” in the system to the same value. Change it via the Settings - Passwords menu. Attention: if you change the password of the user pub-sub, you have to undeploy and re-deploy mod-pubsub! Just upgrading mod-pubsub is not enough; otherwise, the circulation log won’t work.

- Deploy the backend modules

Side note: check how many containers are already running:

sudo docker ps | grep -v "^CONTAINER" | wc -l

68 containers are running:

- 62 Backend Modules of R2-2021

- Stripes with nginx

- 3 Nodes Elasticsearch

- Kafka & Zookeeper

2.1. Upgrade and enable mod-pubsub

First do a simulation run:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id" : "mod-pubsub-2.4.3", "action" : "enable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?simulate=true

Then deploy the module and enable it for the tenant (replace “diku” by the name of your tenant):

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id" : "mod-pubsub-2.4.3", "action" : "enable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?deploy=true\&preRelease=false\&tenantParameters=loadReference%3Dtrue

HTTP/1.1 200 OK

The old module instance, mod-pubsub-2.3.3, has been automatically undeployed by this operation.

2.2 Deploy all the other backend modules

Remove mod-pubsub-2.4.3 from the list ~/platform-complete/okapi-install.json because it has already been deployed.

Deploy all backend modules with this single script deploy-all-backend-modules.sh . You will also need this script deploy-backend-module.sh :

This will download all the necessary container images for the backend modules from Docker Hub and deploy them as containers to the local system :

./deploy-all-backend-modules.sh ~/platform-complete/okapi-install.json <YOUR_IP_ADDRESS>

This script will run for approx. 15 minutes. It will spin up one Docker container for each backend module using Okapi’s /discovery/modules endpoint.
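The two scripts are not reproduced here. As a rough idea of what a single request to Okapi's /discovery/modules endpoint looks like, here is a sketch that is not taken from those scripts. It assumes a deployment descriptor consisting of srvcId and nodeId; the function names and the OKAPI variable are made up for this example.

```shell
#!/bin/sh
# Build an Okapi deployment descriptor for one module on one node.
deployment_descriptor() {
  printf '{"srvcId":"%s","nodeId":"%s"}\n' "$1" "$2"
}

# Ask Okapi to deploy one module (hypothetical helper, not the script above).
deploy_module() {
  curl -w '\n' -D - -X POST -H "Content-Type: application/json" \
    -d "$(deployment_descriptor "$1" "$2")" \
    "${OKAPI:-http://localhost:9130}/_/discovery/modules"
}

# Usage (the node id is the node name reported by /_/discovery/nodes):
#   deploy_module mod-graphql-1.9.0 10.X.X.X
```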

You can follow the progress on the terminal screen and/or in /var/log/folio/okapi/okapi.log .

Now the R3 (Kiwi) backend modules have been deployed, but have not yet been enabled for your tenant.

In addition, the R2 (Juniper) containers are still running on your system. The latter are enabled. This means that, right now, the system is still in the state “R2-2021 (Juniper)”, except for Okapi (but the Okapi is downward compatible to R2) and mod-pubsub.

There are 61 backend modules of R2 (all, except for mod-pubsub) and 65 backend modules of R3 running as containers on your system. Check this by typing

docker ps --all | grep "mod-" | wc -l

126

Side Remark: Three of the backend modules have been deployed twice in the same version, because for those backend modules, the R2 version is the same as the R3 version. These modules are:

mod-service-interaction:1.0.0

mod-graphql:1.9.0

mod-z3950-2.4.0

II.iv. Enable the modules for your tenant

Remove mod-pubsub-2.4.3 and okapi from ~/platform-complete/install.json because they have already been enabled.

Enable frontend and backend modules in a single post. But first, do a simulate run. Don’t deploy the modules because they have already been deployed (use the parameter deploy=false). Load reference data of the modules, but no sample data. If you don’t load reference data for the new modules, you might not be able to utilize the modules properly.

First, do a simulate run (replace “diku” by the name of your tenant):

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @/usr/folio/platform-complete/install.json http://localhost:9130/_/proxy/tenants/diku/install?simulate=true\&preRelease=false

Then, do enable the modules:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d @/usr/folio/platform-complete/install.json http://localhost:9130/_/proxy/tenants/diku/install?deploy=false\&preRelease=false\&tenantParameters=loadReference%3Dtrue

...

HTTP/1.1 100 Continue

HTTP/1.1 200 OK

Side Remark: folio_inventory-es-6.4.0, a frontend module POC for using mod-inventory with Elasticsearch, has been kept and even updated. But in Kiwi you won't need it, because there mod-inventory and folio_inventory work with Elasticsearch anyway. Therefore, you might want to disable folio_inventory-es-6.4.0.

Just for your information, check how many backend modules have now been deployed on your server:

curl -w '\n' -D - http://localhost:9130/_/discovery/modules | grep srvcId | wc -l

128

The number 128 includes 5 Edge modules. It is also useful to get a list of the modules (frontend + backend) that have now been enabled for your tenant:

curl -w '\n' -XGET http://localhost:9130/_/proxy/tenants/diku/modules | grep id | wc -l

132

This number is the sum of the following:

- 56 Frontend modules (including folio_inventory-es)

- 10 Edge modules

- 65 Backend modules (R3-2021)

- 1 Okapi module (4.11.1)

These are all R3 (Kiwi) modules.
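To get this breakdown without counting by hand, the module ids can be grouped by their name prefix. This is a minimal sketch; it assumes that Okapi prints each "id" on its own line (as in the output shown earlier), and the function names module_ids and count_module_kinds are made up for this example.

```shell
#!/bin/sh
# Pull the module ids out of Okapi's pretty-printed JSON (one "id" per line).
module_ids() {
  sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Count module ids read from stdin by their naming prefix.
count_module_kinds() {
  awk '
    /^folio_/ { frontend++ }
    /^edge-/  { edge++ }
    /^mod-/   { backend++ }
    /^okapi-/ { okapi++ }
    END { printf "frontend=%d edge=%d backend=%d okapi=%d\n", frontend, edge, backend, okapi }
  '
}

# Usage:
#   curl -s http://localhost:9130/_/proxy/tenants/diku/modules | module_ids | count_module_kinds
```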

The backend of the new tenant is ready. Now, you have to set up a new Stripes instance for the frontend of the tenant.

Install Stripes and nginx in a Docker container. Use the docker file of platform-complete. Change OKAPI_URL to your server name or SAN - usually the name for which you have an SSL certificate. Change the TENANT_ID to your tenant ID:

cd ~/platform-complete

edit docker/Dockerfile

ARG OKAPI_URL=http(s)://<YOUR_DOMAIN_NAME>/okapi

ARG TENANT_ID=diku # Or change to your tenant's name

Use https if possible, i.e. use an SSL certificate. <YOUR_DOMAIN_NAME> should then be the fully qualified domain name (FQDN) for which your certificate is valid, or a server alias name (SAN) which applies to your certificate. If using http, it is your server name (host name plus domain). The subpath /okapi of your domain name will be redirected to port 9130 below, in your nginx configuration.

Configure webserver to serve Stripes webpack: Edit docker/nginx.conf. Place your local server name – this is not necessarily equal to the server name for which you have an SSL certificate ! – in server_name and use your IP address in proxy_pass :

edit docker/nginx.conf

server {

listen 80;

server_name <YOUR_SERVER_NAME>;

charset utf-8;

access_log /var/log/nginx/host.access.log combined;

# front-end requests:

# Serve index.html for any request not found

location / {

# Set path

root /usr/share/nginx/html;

index index.html index.htm;

include mime.types;

types {

text/plain lock;

}

try_files $uri /index.html;

}

# back-end requests:

location /okapi {

rewrite ^/okapi/(.*) /$1 break;

proxy_pass http://<YOUR_IP_ADDRESS>:9130/;

}

}

<YOUR_SERVER_NAME> should be the real name of your server in your network. <YOUR_SERVER_NAME> should consist of host name plus domain name, e.g. myserv.mydomain.edu.

Edit the url and tenant in stripes.config.js. The url will be requested by a FOLIO client, thus a browser. Make sure that you use the public IP or domain of your server. Use http only if you want to access your FOLIO installation only from within your network.

edit stripes.config.js

okapi: { 'url':'http(s)://<YOUR_DOMAIN_NAME>/okapi', 'tenant':'diku' },

You might also edit branding in stripes.config.js, e.g. add your own logo and favicon as desired. Edit these lines:

branding: {

logo: {

src: './tenant-assets/mybib.gif',

alt: 'My Folio Library',

},

favicon: {

src: './tenant-assets/mybib_icon.gif'

},

}

Finally, build the docker container which will contain Stripes and nginx :

sudo su

docker build -f docker/Dockerfile --build-arg OKAPI_URL=http(s)://<YOUR_DOMAIN_NAME>/okapi --build-arg TENANT_ID=diku -t stripes .

Sending build context to Docker daemon 1.138GB

Step 1/19 : FROM node:15-alpine as stripes_build

...

Step 19/19 : ENTRYPOINT ["/usr/bin/entrypoint.sh"]

---> Running in a47dce4e3b3e

Removing intermediate container a47dce4e3b3e

---> 48a532266f21

Successfully built 48a532266f21

Successfully tagged stripes:latest

This will run for approximately 15 minutes.

Stop the old Stripes container: docker stop <container id of your old stripes container>

Completely free your port 80. Look if something is still running there: e.g., do

netstat -taupn | grep 80

If there should be something still running on port 80, kill these processes.
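Note that grep 80 also matches other ports such as 8080, or process IDs that contain 80. A narrower filter could look like the following sketch; it assumes the usual netstat column layout in which the local address is the fourth column, and the function name on_local_port is made up for this example.

```shell
#!/bin/sh
# Keep only netstat lines whose local address (4th column) ends in ":<port>".
on_local_port() {
  awk -v port="$1" '$4 ~ (":" port "$")'
}

# Usage:
#   netstat -taupn | on_local_port 80
```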

Start the stripes container:

Redirect port 80 from the outside to port 80 of the docker container:

nohup docker run -d -p 80:80 stripes

Log in to your frontend: E.g., go to http://<YOUR_HOST_NAME>/ in your browser.

- Can you see the R3 modules in Settings - Installation details?

- Do you see the right okapi version, 4.11.1-1?

- Does everything look good?

If so, remove the old stripes container: docker rm <container id of your old stripes container> .

II.vi. Cleanup

Clean up. Undeploy all unused containers.

In general, all R2 modules have to be removed now. But some care has to be taken.

a) If you have more than one tenant on your server, some tenants may still need R2 modules ! Don’t delete those R2 modules. Check also for your supertenant; did you enable anything else than okapi for it ?

b) Sometimes, R2 versions are the same as R3 versions. In this case, you will have two containers of the same module version running on your system (because we have deployed it twice). You might just leave it as is. Okapi will do some kind of round robin between the two module instances.

But if you decide to delete (=undeploy) one of those containers from Okapi’s discovery, you should disable and re-enable the module for your tenants, afterwards.

Create a list of containers which are in your okapi discovery

curl -w '\n' -XGET http://localhost:9130/_/discovery/modules | jq '.[] | .srvcId + "/" + .instId' > dockerps.sh

sort dockerps.sh > dockerps.todelete.sh

This list should still contain the module versions of the old release:

=> backend modules (mod-*) of the old release (61 modules) + backend modules of the new release (62 = 65 - 3 which are the same in the old release) + 5 Edge modules =128 modules.

Of those 128 modules, 58 have now to be undeployed:

Undeploy all R2 modules (which are still running), except for at least one instance of mod-service-interaction:1.0.0, mod-graphql:1.9.0 and mod-z3950-2.4.0 (because they are on the same version in R2 and R3).

If you have deployed mod-service-interaction:1.0.0, mod-graphql:1.9.0 and mod-z3950-2.4.0 twice and want to undeploy one instance of them now, you will need to undeploy 61 modules.

Compare the list with list of R3 modules

Compare the list dockerps.todelete.sh with the list ~/platform-complete/okapi-install.json (the R3 backend modules). First, sort this list:

sort okapi-install.json > okapi-install.json.sorted

Now, throw out all modules which are in okapi-install.json.sorted out of the list dockerps.todelete.sh .

This should leave you with a list of 58 (or 61) modules which are to be deleted now. If you decided to delete one of the instances of those modules which have been deployed twice, you should now have a list of 61 modules.

Edit dockerps.todelete.sh once again to make each line look like this (e.g. for mod-agreements; i.e. prepend “curl -w ‘\n’ -D - -XDELETE http://localhost:9130/_/discovery/modules/” to each line) :

curl -w '\n' -D - -XDELETE http://localhost:9130/_/discovery/modules/mod-agreements-4.1.1/<instId>
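The comparison and the editing can also be scripted. The following is a sketch under assumptions: each "id" entry in okapi-install.json sits on its own line, the discovery list contains one "srvcId/instId" per line, and the function names and the file r3-ids.txt are made up for this example. It keeps every instance whose module id appears in the R3 list, so modules with identical R2 and R3 versions are not touched. Review the generated file before executing it.

```shell
#!/bin/sh
# List the module ids contained in okapi-install.json (one per line).
# Assumption: each "id" entry sits on its own line.
r3_module_ids() {
  sed -n 's/.*"id"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Print one DELETE command for every deployed instance whose module id
# is not part of the R3 list.
#   $1: file with "srvcId/instId" lines (the jq output above, quotes allowed)
#   $2: file with one R3 module id per line
delete_commands() {
  prefix="curl -w '\\n' -D - -XDELETE http://localhost:9130/_/discovery/modules"
  tr -d '"' < "$1" | while IFS=/ read -r srvc inst; do
    if ! grep -qxF -- "$srvc" "$2"; then
      printf '%s/%s/%s\n' "$prefix" "$srvc" "$inst"
    fi
  done
}

# Usage:
#   r3_module_ids okapi-install.json > r3-ids.txt
#   delete_commands dockerps.sh r3-ids.txt > dockerps.todelete.sh
```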

Finally, remove all unused modules

Add a line #!/bin/bash at the top of your delete script, make the script executable and then execute your delete script:

./dockerps.todelete.sh

This will be acknowledged by an “HTTP/1.1 204 No Content” for each module.

Now, finally once again get a list of the deployed backend modules:

curl -w '\n' -D - http://localhost:9130/_/discovery/modules | grep srvcId | wc -l

65

This should only contain the module versions of the new release now : 65 backend modules of R3-2021.

Compare this with the number of your running docker containers:

docker ps --all | grep "mod-" | wc -l

65

docker ps --all | wc -l

72

The following containers are running on your system, but do not contain backend modules:

Stripes

3x Elasticsearch

Kafka

Zookeeper

In sum, these are 6 containers without backend modules.

Also subtract the header line (of “docker ps”), and you will arrive at 72 - 7 = 65 containers with backend modules (the figure of the first “wc”).

C’EST FINI !

Aftermath:

If you have undeployed one instance of mod-service-interaction:1.0.0, mod-graphql:1.9.0 and mod-z3950-2.4.0 each, do this now:

Disable those modules for your tenant and re-enable them. This will prevent Okapi from still trying to round robin with the deleted module instance. You also need to disable folio_dashboard-2.0.0 because it depends on mod-service-interaction-1.0.0 :

Disable the modules:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id": "mod-graphql-1.9.0", "action": "disable" }, { "id": "mod-z3950-2.4.0", "action": "disable" }, { "id": "mod-service-interaction-1.0.0", "action": "disable" }, { "id": "folio_dashboard-2.0.0", "action": "disable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?simulate=true\&preRelease=false

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id": "mod-graphql-1.9.0", "action": "disable" }, { "id": "mod-z3950-2.4.0", "action": "disable" }, { "id": "mod-service-interaction-1.0.0", "action": "disable" }, { "id": "folio_dashboard-2.0.0", "action": "disable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?deploy=false\&preRelease=false\&tenantParameters=loadReference%3Dtrue%2CloadSample%3Dfalse

Re-enable the modules:

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id": "mod-graphql-1.9.0", "action": "enable" }, { "id": "mod-z3950-2.4.0", "action": "enable" }, { "id": "mod-service-interaction-1.0.0", "action": "enable" }, { "id": "folio_dashboard-2.0.0", "action": "enable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?simulate=true\&preRelease=false

curl -w '\n' -D - -X POST -H "Content-type: application/json" -d '[ { "id": "mod-graphql-1.9.0", "action": "enable" }, { "id": "mod-z3950-2.4.0", "action": "enable" }, { "id": "mod-service-interaction-1.0.0", "action": "enable" }, { "id": "folio_dashboard-2.0.0", "action": "enable" } ]' http://localhost:9130/_/proxy/tenants/diku/install?deploy=false\&preRelease=false\&tenantParameters=loadReference%3Dtrue%2CloadSample%3Dfalse

1.6 - Customizations

Note: This content is currently in draft status.

Branding Stripes

Stripes has some basic branding configurations that are applied during the build process. In the file stripes.config.js, you can configure the logo and favicon of the tenant, and the CSS style of the main navigation and the login. These parameters can be set under the branding key at the end of the file. You can add the new images in the folder tenant-assets and link to them in the configuration file.

In the config key you can set the welcomeMessage shown after login, the platformName that is appended to the page title and is shown in browser tabs and browser bookmarks. Add a header with additional information to the Settings > Software versions page via the aboutInstallVersion and aboutInstallDate properties, the latter is automatically converted into the locale of the user opening the page, for example 10/30/2023 or 30.10.2023.

Take into account that these changes will take effect after you build the webpack for Stripes.

Example stripes.config.js customization:

module.exports = {

config: {

welcomeMessage: 'Welcome, the Future Of Libraries Is OPEN!',

platformName: 'FOLIO',

aboutInstallVersion: 'Poppy Hot Fix #2',

aboutInstallDate: '2023-10-30',

},

branding: {

logo: {

src: './tenant-assets/my-logo.png',

alt: 'my alt text',

},

favicon: {

src: './tenant-assets/my-favicon.ico',

},

style: {

mainNav: {

backgroundColor: "#036",

},

login: {

backgroundColor: "#fcb",

},

},

},

}

Okapi security

Make sure that you have secured Okapi before publishing it to the Internet. If you do not configure a super-tenant user and password for Okapi API, any user on the net could run privileged requests. The process of securing Okapi is performed with the secure-supertenant script and it is explained in the Single server deployment guides.

Additionally, it is recommended that you configure SSL certificates for Okapi in order to prevent data being sent as plain text over the Internet. Okapi does not have native HTTPS support, but you can set up a reverse proxy (e.g NGINX) that receives HTTPS requests and forwards them to Okapi. You can find more information about HTTPS on NGINX here. Also, if you are using an Ingress in Kubernetes, you can configure SSL certificates using Rancher. For more information on this process check here.

Email configuration

The module mod-email delivers messages through an SMTP server to send emails in FOLIO. It is used for sending notifications and resetting user passwords.

The mod-email module uses mod-configuration to get connection parameters. A detailed list of parameters can be found in the documentation of the module. The required configuration options are the following:

- EMAIL_SMTP_HOST

- EMAIL_SMTP_PORT

- EMAIL_USERNAME

- EMAIL_PASSWORD

- EMAIL_FROM

- EMAIL_SMTP_SSL

These parameters should be set in Okapi through POST requests using the name of the module: SMTP_SERVER. For example, the host configuration would look like this.

curl -X POST \

http://localhost:9130/configurations/entries \

-H 'Content-Type: application/json' \

-H 'X-Okapi-Tenant: <tenant>' \

-H 'x-okapi-token: <token>' \

-d '{

"module": "SMTP_SERVER",

"configName": "smtp",

"code": "EMAIL_SMTP_HOST",

"description": "server smtp host",

"default": true,

"enabled": true,

"value": "smtp.googlemail.com"

}'

Take into account that this configuration is performed on a per tenant basis and the tenant ID is defined in the X-Okapi-Tenant header. Also, you have to be logged in as the superuser of the tenant and provide the access token in the header x-okapi-token. You can find an example of a login request here.
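The remaining options can be posted in the same way. The following sketch mirrors the EMAIL_SMTP_HOST request; the function names, the OKAPI, TENANT and TOKEN variables and the sample values are assumptions made for this example. It reuses the option code as the description and assumes that values contain no double quotes or backslashes.

```shell
#!/bin/sh
# Build the mod-configuration entry for one SMTP option,
# mirroring the EMAIL_SMTP_HOST example above.
# Assumption: code and value contain no double quotes or backslashes.
smtp_entry_json() {
  printf '{"module":"SMTP_SERVER","configName":"smtp","code":"%s","description":"%s","default":true,"enabled":true,"value":"%s"}\n' "$1" "$1" "$2"
}

# POST one entry; TENANT and TOKEN must be set in the environment.
post_smtp_entry() {
  curl -X POST "${OKAPI:-http://localhost:9130}/configurations/entries" \
    -H 'Content-Type: application/json' \
    -H "X-Okapi-Tenant: $TENANT" \
    -H "x-okapi-token: $TOKEN" \
    -d "$(smtp_entry_json "$1" "$2")"
}

# Usage (hypothetical values):
#   post_smtp_entry EMAIL_SMTP_PORT 587
#   post_smtp_entry EMAIL_FROM noreply@example.org
```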

Once you have configured the mod-email module, you should configure other modules related to the email configuration. You should configure the users module and edge-sip2. You can find a Bash script that could be used to automate this process here. Make sure that you replace all of the environment variables required for the script.

Alternatively, if you deployed FOLIO on a Kubernetes cluster, you can create a Kubernetes Job for this task. This docker project https://github.com/folio-org/folio-install/tree/kube-rancher/alternative-install/kubernetes-rancher/TAMU/deploy-jobs/create-email can be built, pushed to the image registry and executed on the cluster similarly to other scripts mentioned in the Kubernetes deployment section.

Install and serve edge modules

These instructions have been written for a single server environment in which Okapi is running on localhost:9130.

If you do a test installation of FOLIO, you do not need to install any edge modules at all. Install an edge module in a test environment only if you want to test the edge module.

The Edge modules bridge the gap between some specific third-party services and FOLIO (e.g. RTAC, OAI-PMH). In these FOLIO reference environments, the set of edge services are accessed via port 8000. In this example, the edge-oai-pmh will be installed.

You can find more information about the Edge modules of FOLIO in the Wiki https://wiki.folio.org/display/FOLIOtips/Edge+APIs.

- Create institutional user. An institutional user must be created with appropriate permissions to use the edge module. You can use the included create-user.py to create a user and assign permissions.

python3 create-user.py -u instuser -p instpass \

--permissions oai-pmh.all --tenant diku \

--admin-user diku_admin --admin-password admin

If you need to specify an Okapi instance running somewhere other than http://localhost:9130, then add the --okapi-url flag to pass a different url. If more than one permission set needs to be assigned, then use a comma delimited list, i.e. --permissions edge-rtac.all,edge-oai-pmh.all.

- The institutional user is created for each tenant for the purposes of edge APIs. The credentials are stored in one of the secure stores and retrieved as needed by the edge API. See more information about secure stores. This example uses a basic EphemeralStore with an ephemeral.properties file, which stores credentials in plain text. This is meant for development and demonstration purposes only.

sudo mkdir -p /etc/folio/edge

sudo vi /etc/folio/edge/edge-oai-pmh-ephemeral.properties

The ephemeral properties file should look like this.

secureStore.type=Ephemeral

# a comma separated list of tenants

tenants=diku

#######################################################

# For each tenant, the institutional user password...

#

# Note: this is intended for development purposes only

#######################################################

# format: tenant=username,password

diku=instuser,instpass
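For a single tenant, the same file can also be generated instead of typed. This is a minimal sketch; the function name ephemeral_props is made up for this example, and it writes only the three effective settings without the comment lines.

```shell
#!/bin/sh
# Print an ephemeral.properties file for a single tenant.
#   $1: tenant id, $2: institutional user, $3: password
ephemeral_props() {
  printf 'secureStore.type=Ephemeral\n'
  printf 'tenants=%s\n' "$1"
  printf '%s=%s,%s\n' "$1" "$2" "$3"
}

# Usage:
#   ephemeral_props diku instuser instpass | sudo tee /etc/folio/edge/edge-oai-pmh-ephemeral.properties
```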

- Start edge module Docker containers.

You will need the version of the edge-modules available on Okapi for the tenant. You can run a CURL request to Okapi and get the version of the edge-oai-pmh module.

curl -s http://localhost:9130/_/proxy/tenants/diku/modules | jq -r '.[].id' | grep 'edge-'

- Set up a docker compose file in /etc/folio/edge/docker-compose.yml that defines each edge module that is to be run as a service. The compose file should look like this.

version: '2'
services:
  edge-oai-pmh:
    ports:
      - "9700:8081"
    image: folioorg/edge-oai-pmh:2.2.1
    volumes:
      - /etc/folio/edge:/mnt
    command:
      -"Dokapi_url=http://10.0.2.15:9130"
      -"Dsecure_store_props=/mnt/edge-oai-pmh-ephemeral.properties"
    restart: "always"

Make sure you use the private IP of the server for the Okapi URL.

- Start the edge module containers.

cd /etc/folio/edge

sudo docker-compose up -d

- Set up NGINX.

- Create a new virtual host configuration to proxy the edge modules. This needs to be done inside your Stripes container.

Log in to the stripes container, cd into the nginx configuration directory and create a new nginx configuration file there:

docker ps --all | grep stripes

docker exec -it <stripes container id> /bin/sh

cd /etc/nginx/conf.d

edit edge-oai.conf

Insert the following contents into the new file edge-oai.conf:

server {

listen 8130;

server_name <YOUR_SERVER_NAME>;

charset utf-8;

access_log /var/log/nginx/oai.access.log combined;

location /oai {

rewrite ^/oai/(.*) /$1 break;

proxy_pass http://<YOUR_SERVER_NAME>:9700/;

}

}

YOUR_SERVER_NAME might be localhost. If you are working inside a Vagrant box, it is 10.0.2.15.

Exit the container and then restart the container:

docker restart <stripes container id>

You might also want to modify the Dockerfile that builds your Stripes container, so that you will be able to re-build the container later or on some other machine.

Add the file edge-oai.conf (with the contents as above) to the directory $HOME/platform-complete/docker/.

Then add a line to the Dockerfile $HOME/platform-complete/docker/Dockerfile:

COPY docker/edge-oai.conf /etc/nginx/conf.d/edge-oai.conf

Commit your changes to a local (or personal or institutional) git repository.

Now, an OAI service is running on http://server:8130/oai .

- Follow this procedure to generate the API key for the tenant and institutional user that were configured in the previous sections. Currently, the edge modules are protected through API Keys.

cd ~

git clone https://github.com/folio-org/edge-common.git

cd edge-common

mvn package

java -jar target/edge-common-api-key-utils.jar -g -t diku -u instuser

This will return an API key that must be included in requests to edge modules. With this APIKey, you can test the edge module access. For instance, a test OAI request would look like this.

curl -s "http://localhost:8130/oai?apikey=APIKEY=&verb=Identify"

The specific method to construct a request for an edge module is documented in the developers website: https://dev.folio.org/source-code/map/ or you can refer to the github project of the edge module.